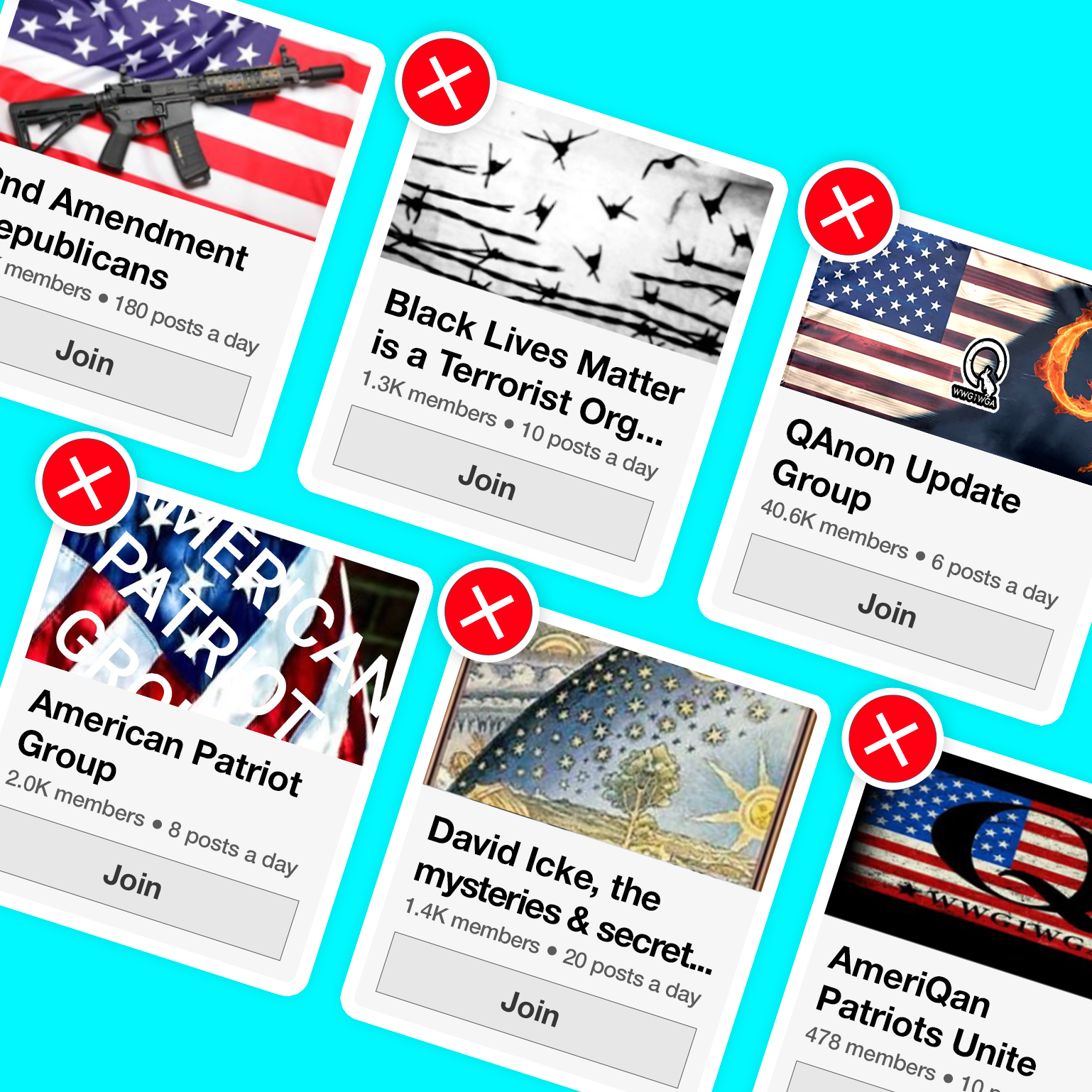

Facebook Groups pose a singular threat to this election season. They’ve become hidden breeding grounds for disinformation campaigns and organizing platforms for extremists. And Facebook’s own AI actively grows these dangerous networks by promoting them to vulnerable users.

“64% of all extremist group joins are due to our recommendation tools”

In 2016, foreign adversaries exploited Facebook Groups to sow chaos and inflame tensions around the election, and domestic bad actors have followed suit. In 2020, we’ve already seen private Groups being used to promote political violence, seed conspiracy theories about mail-in ballots, and push hoaxes to incite local vigilantes.

Facebook has prioritized Groups in recent years despite red flags from its own researchers and incessant warnings from experts. The real-world consequences have been devastating: Groups have turbocharged viral conspiracy theories about coronavirus, mainstreamed violent extremist movements, created echo chambers of racism, and more.

As Facebook itself concluded, “our recommendation systems grow the problem.” While the company ostensibly has “baseline standards” to prevent this, even its explicit promises to stop recommending clear-cut categories of Groups, such as QAnon and Boogaloo, have repeatedly proven empty.

Election season is already upon us. The potential to weaponize Groups to spread disinformation, suppress voters, organize intimidation efforts, or incite violence will only grow.