With misinformation undermining the response to coronavirus, inflaming societal tensions, and threatening democracy, voters expect and demand more from social media platforms. Beyond holding deeply unfavorable views of the social media giants and their leaders, voters have strong concerns about the platforms' trustworthiness, lack confidence in their plans to protect users from election misinformation, and overwhelmingly support erecting new guardrails this election season.

GQR conducted a 20-minute online survey among 1,000 Registered Voters nationwide from September 10-14, 2020. Respondents were contacted from a panel sample of nationwide registered voters. Because the sample is based on those who initially self-selected for participation in the panel rather than a probability sample, no estimates of sampling error can be calculated. All sample surveys and polls may be subject to multiple sources of error, including, but not limited to, sampling error, coverage error, and measurement error. If this poll were conducted among a probability sample, the margin of error would be +/- 3.1 percentage points at the 95 percent confidence level; the margin of error is higher among subgroups. The data are statistically weighted to ensure the sample’s regional, age, gender and partisan composition reflects that of the estimated Registered Voters nationwide.
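As a rough check on the +/- 3.1 figure, the sketch below applies the conventional margin-of-error formula for a simple random sample. It assumes the worst-case proportion of 50 percent and a z-value of 1.96 for 95 percent confidence, and it ignores any effect of weighting; none of these details are stated in the memo. The function name `margin_of_error` and the subgroup size of 300 are hypothetical, chosen only for illustration.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Conventional margin of error for a simple random sample of size n.

    Assumptions (not from the memo): p = 0.5 is the worst case,
    z = 1.96 corresponds to 95 percent confidence, no design effect.
    """
    return z * math.sqrt(p * (1 - p) / n)

# Full sample of 1,000 registered voters, as reported in the memo.
full_sample = margin_of_error(1000)
print(f"n=1000: +/- {full_sample * 100:.1f} points")  # prints "n=1000: +/- 3.1 points"

# Hypothetical subgroup of 300 respondents (size chosen for illustration only).
subgroup = margin_of_error(300)
print(f"n=300:  +/- {subgroup * 100:.1f} points")
```

Because the sample size sits under the square root, a smaller subgroup always yields a wider margin, which is why the memo notes that the margin of error is higher among subgroups.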

Key Findings

A majority of voters (52 percent) support shutting social media platforms down altogether for the week of the election. That sentiment is bipartisan: 54 percent of Democrats and 51 percent of Republicans support shutting down social media platforms for election week. This was a surprising finding that illustrates how deep mistrust of social media platforms, and their misinformation policies, runs.

Four in five voters (79 percent) say social media companies need to do more to protect democracy. Just 14 percent of voters are extremely (6 percent) or very (8 percent) confident that social media platforms will prevent the misuse of their platforms to influence the election. By contrast, 62 percent of voters are not very (30 percent) or not at all (32 percent) confident in the platforms.

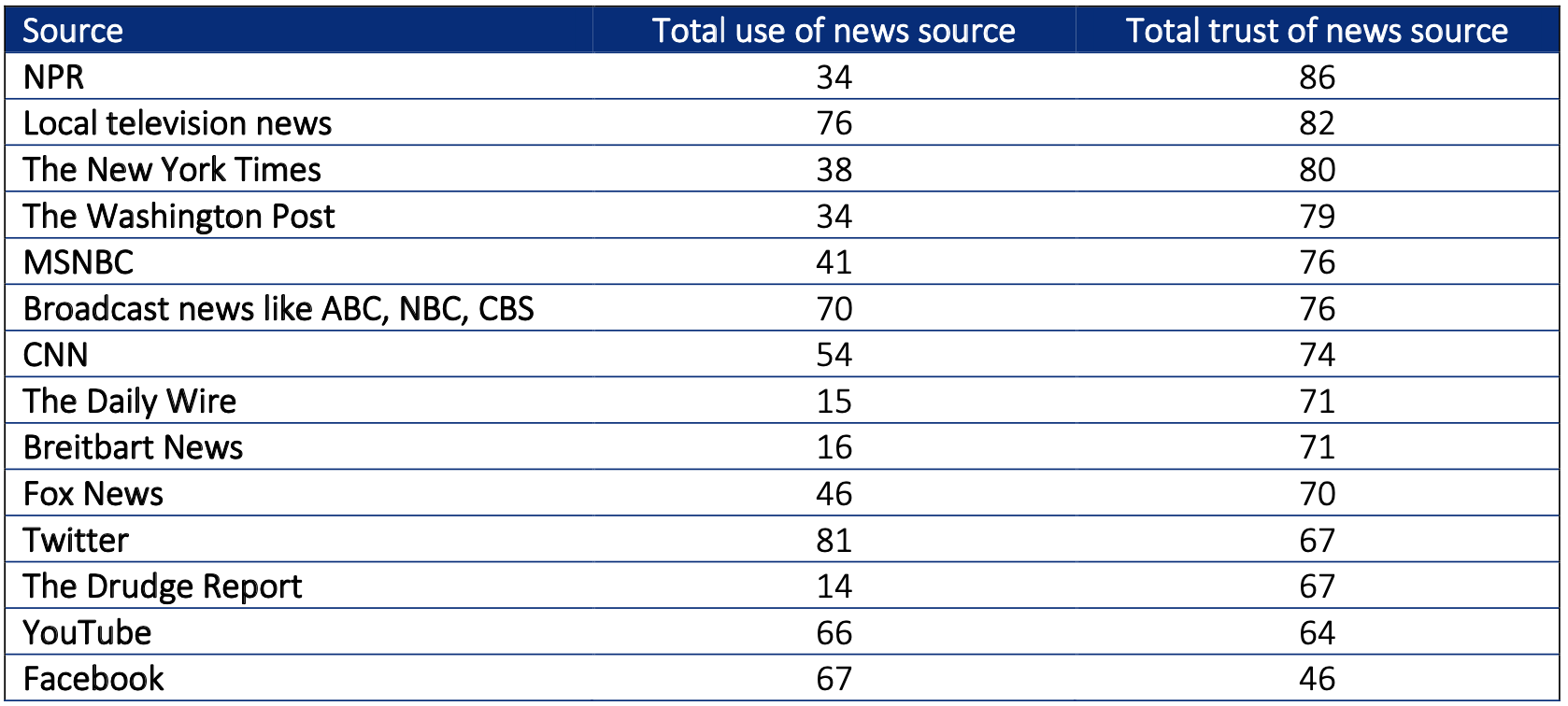

Facebook is widely used and also the least trusted platform for news. Facebook is the most used social media site among voters: nearly two thirds (65 percent) report using the platform, and two thirds of Facebook users get news from it. Despite this broad usage, half of voters (52 percent) hold unfavorable views of the site, and four in ten Facebook users view the platform unfavorably. Notably, Facebook's net favorability dropped from -4 to -15 in the six weeks since our last poll.

Most importantly, Facebook is the least trusted news source (only 18 percent completely or mostly trust it) when compared with other social media platforms and traditional sources like broadcast and newspapers.

Voters do not have confidence that social media sites will prevent the misuse of their platforms. Nearly two thirds of voters (62 percent) say they are not confident that social media platforms will prevent election misinformation from influencing the election this November. Tech companies like Google enjoy a small majority of confidence (51 percent confident), but four in ten voters say they have no confidence at all in social media platforms like Facebook (42 percent), Instagram (40 percent), and Twitter (43 percent).

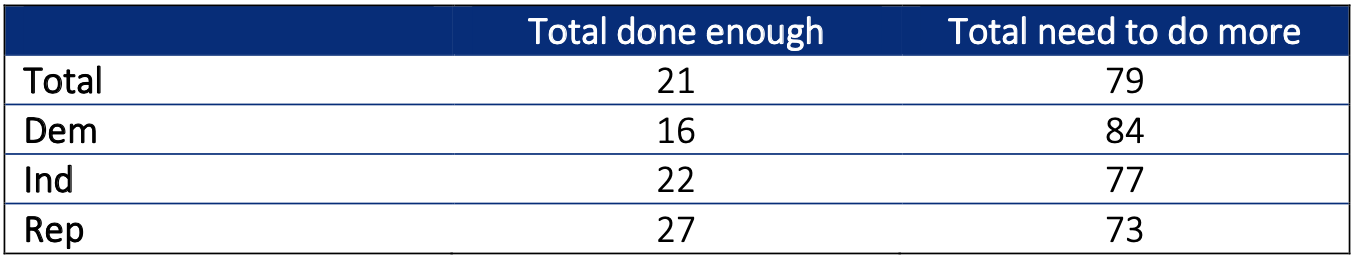

Voters think social media platforms should do more. Nearly all voters (91 percent) believe that social media sites are responsible for the spread of election misinformation, and over a third (38 percent) say they are mostly responsible. Responsibility for a problem comes with responsibility for a solution. Over three quarters (79 percent) of voters say that social media platforms need to do more to protect democracy. The call to action crosses partisan lines, with strong majorities of both Democrats (85 percent) and Republicans (73 percent) asking social media platforms to do more to protect election integrity and democracy.

Voters want accurate information and quick action from social media platforms. Voters do not mind delays for more accurate information. Two thirds (64 percent) say they would rather posts have a small delay to be checked for accuracy than have an inaccurate post be public at all. Additionally, generic labels and warnings are not enough for voters.

Currently, Facebook places a flag on all posts about voting and the November election that encourages users to fact check sources, regardless of the post's accuracy. It will also add a generic label to any post from a candidate or campaign that declares victory or loss before the final election results are in; the label will point to official election results without stating whether the information is true or false. Voters broadly think this policy will be effective (60 percent) but do so weakly (17 percent say very effective). About seven in ten voters (71 percent) would rather a post be completely taken down than merely flagged as wrong. Over a third of voters strongly believe that if a candidate or campaign declares victory before final results are in, the post should be immediately removed.

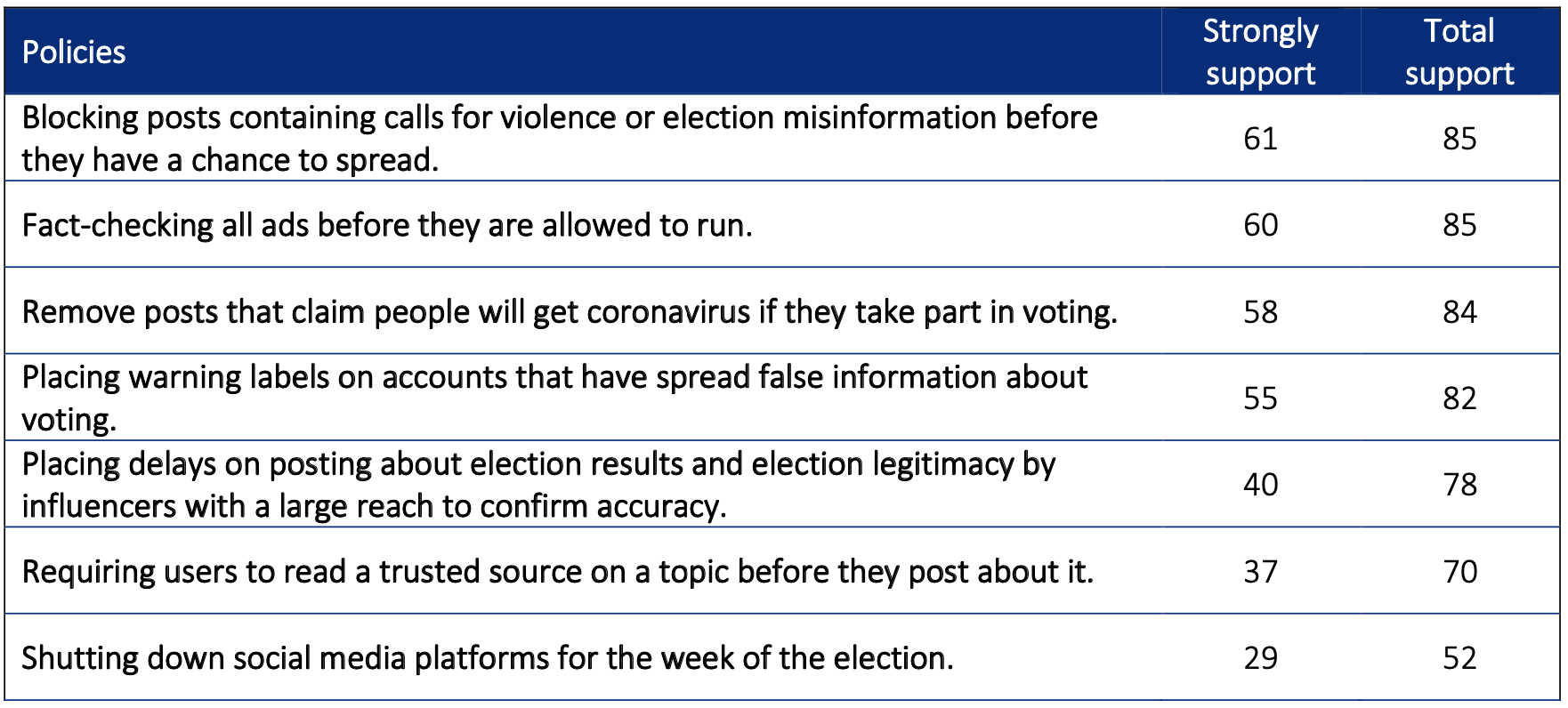

Voters overwhelmingly support a wide range of policies to curb misinformation about democratic processes, many of which were proposed in Accountable Tech’s new Election Integrity Roadmap – including:

- 82 percent support (55 percent strongly support) placing warning labels on accounts that have spread false information about voting.

- 85 percent support (61 percent strongly support) blocking posts containing calls for violence or election misinformation before they have a chance to spread.

Accountable Tech’s proposed Election Integrity Strike System outlines tiered penalties to progressively limit the reach and impact of serial disinformers, defanging the worst actors before the most volatile period of the election season.

Accountable Tech’s proposed Violence Prevention Preclearance system calls for automatically flagging election-related posts from the highest-reach accounts (after polls close, at least) for rapid human review to preempt content that would violate violence incitement or civic integrity policies before it’s published.

The following policies are the most strongly supported.

- Blocking posts containing calls for violence or election misinformation before they have a chance to spread.

- Fact checking all ads (or all political ads) before they are allowed to run.

- Placing warning labels on accounts that have spread false information about voting.

The above policies reflect voters’ desire for social media platforms to (1) take quick and bold action against those who spread election misinformation and (2) provide accurate information on the platform. Policies that punish and identify accounts that spread false information are among the most popular: voters want inaccurate posts to be blocked and accounts with a pattern of bad behavior to be flagged. Ads on social media are contentious, and six in ten voters say they strongly support fact checking every single ad on social media before it is allowed to run to prevent misinformation.